Drive Right explores human-machine interaction for next-generation autonomous vehicles, with the broader goal of improving road safety. The project is supervised by Dr. Rahul Mangharam of the Department of Electrical and Systems Engineering (ESE) at the University of Pennsylvania and is affiliated with the autonomous driving start-up Jitsik LLC.

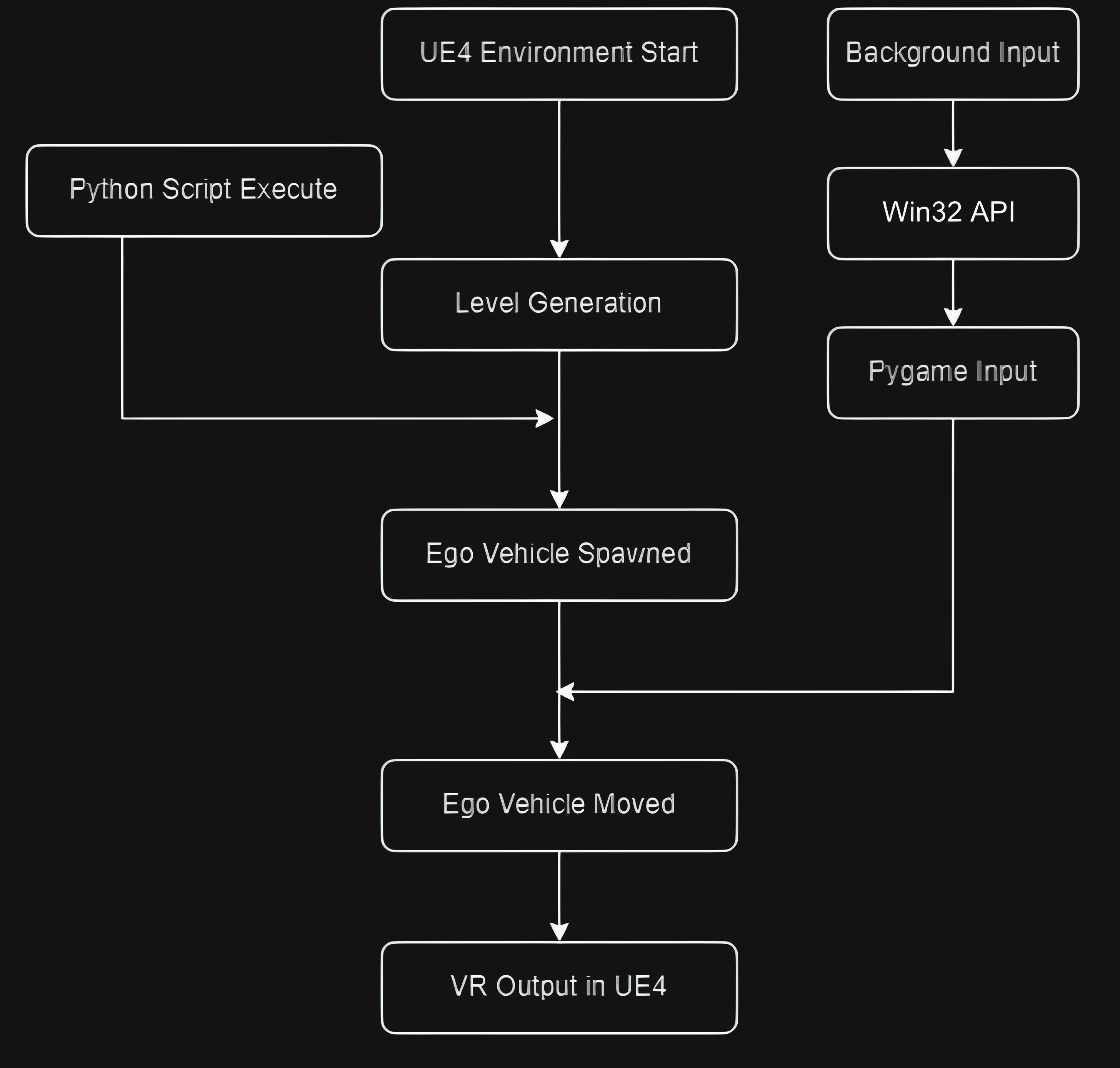

This project builds on CARLA, an open-source simulator for autonomous driving research that is based on a fork of Unreal Engine 4 (UE4), and uses the Oculus Quest 2 as its XR hardware platform. I implemented the XR functionality for the CARLA simulator, which has two major limitations for VR development. The first is its reliance on Pygame for video output and user input. Because CARLA reads input through Pygame, the Pygame window that opens when the simulation script runs must have focus in order to receive user input. Pygame also has extremely limited VR support, which makes implementing VR directly on top of it almost impossible. To overcome this limitation, I restructured CARLA to route the video output back to UE4 and to handle user input directly through Win32 APIs.
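The write-up does not show the input-handling code or say whether it lives in the Python client or on the UE4 side, so the following is only a minimal Python sketch of the general idea: polling the keyboard through the Win32 `GetAsyncKeyState` function (via `ctypes`), which reports key state without depending on a Pygame window having focus. The W/A/S/D bindings, the `Control` dataclass (a stand-in for the `throttle`/`steer`/`brake` fields of `carla.VehicleControl`), and the helper names are hypothetical assumptions. On non-Windows platforms the sketch simply reports no keys pressed.

```python
import ctypes
import sys
from dataclasses import dataclass

# Win32 virtual-key codes for letter keys equal their uppercase ASCII codes.
# The W/A/S/D bindings are a hypothetical choice for illustration.
VK_W, VK_A, VK_S, VK_D = ord("W"), ord("A"), ord("S"), ord("D")


@dataclass
class Control:
    """Hypothetical stand-in for the throttle/steer/brake fields of carla.VehicleControl."""
    throttle: float = 0.0
    steer: float = 0.0  # CARLA convention: negative = left, positive = right
    brake: float = 0.0


def is_key_down(vk_code: int) -> bool:
    """Query the physical key state via Win32, independent of any Pygame window focus."""
    if sys.platform != "win32":
        return False  # Win32 API is unavailable on this platform
    user32 = ctypes.windll.user32
    user32.GetAsyncKeyState.restype = ctypes.c_short  # the API returns a SHORT
    # The most significant bit is set while the key is being held down.
    return bool(user32.GetAsyncKeyState(vk_code) & 0x8000)


def keys_to_control(pressed: set, steer_step: float = 1.0) -> Control:
    """Map a set of held virtual-key codes to a control command."""
    control = Control()
    if VK_W in pressed:
        control.throttle = 1.0
    if VK_S in pressed:
        control.brake = 1.0
    # Left and right cancel each other out when both are held.
    right = steer_step if VK_D in pressed else 0.0
    left = steer_step if VK_A in pressed else 0.0
    control.steer = right - left
    return control


def poll_control() -> Control:
    """Sample the bound keys once and build a control command from them."""
    pressed = {vk for vk in (VK_W, VK_A, VK_S, VK_D) if is_key_down(vk)}
    return keys_to_control(pressed)
```

Separating the pure `keys_to_control` mapping from the platform-specific polling keeps the binding logic testable on any OS. In a simulation loop, `poll_control()` would be called once per tick.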

The second limitation is that none of the vehicle models that ship with CARLA have detailed interiors, and their shared vehicle skeleton is simple, with no bones for the steering wheel, speedometer, tachometer, or other cockpit instruments. Because CARLA was not designed with a first-person perspective in mind, the view from the driver's seat has extremely low fidelity. To address this, I migrated the model and skeleton from an open-source car configuration project made by Audi and used them as the rendered mesh. I disabled mesh rendering for the original CARLA vehicle models, keeping only their colliders. I then modified the control rig that comes with the car configuration project and programmed the animation in Blueprint.
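The Blueprint graph itself is not shown here. As a rough illustration of the kind of mapping such an animation typically computes, the Python sketch below converts vehicle state into rotation angles for the new bones: a steering command into a steering-wheel angle, and a speed reading into a needle angle. The gauge ranges, the 900° lock-to-lock rotation, and all function names are hypothetical assumptions; only the m/s-to-km/h conversion (CARLA reports velocity in m/s) is standard arithmetic.

```python
import math
from dataclasses import dataclass


@dataclass
class GaugeSpec:
    """Hypothetical calibration for a needle gauge (speedometer or tachometer)."""
    max_value: float              # reading at full-scale deflection, e.g. km/h or rpm
    sweep_deg: float              # total needle travel in degrees
    zero_offset_deg: float = 0.0  # needle angle at a reading of zero


def speed_kmh(vx: float, vy: float, vz: float) -> float:
    """Convert a velocity vector in m/s to a scalar speed in km/h."""
    return 3.6 * math.sqrt(vx * vx + vy * vy + vz * vz)


def needle_angle(value: float, gauge: GaugeSpec) -> float:
    """Linearly map a reading to a needle rotation, clamped to the dial's range."""
    fraction = min(max(value / gauge.max_value, 0.0), 1.0)
    return gauge.zero_offset_deg + fraction * gauge.sweep_deg


def steering_wheel_angle(steer: float, lock_to_lock_deg: float = 900.0) -> float:
    """Map a steer command in [-1, 1] to a steering-wheel bone rotation in degrees."""
    steer = min(max(steer, -1.0), 1.0)
    return steer * lock_to_lock_deg / 2.0
```

In an engine, values like these would be fed each frame to the control rig's bone transforms. Clamping keeps the needle and wheel from rotating past their physical stops.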

While wearing the headset, the user can control the simulator with a Logitech steering wheel controller. I developed a Flask server that keeps the rotation of the virtual steering wheel synchronized with that of the physical steering wheel.
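The server's actual endpoints and message format are not described, so the following is only a minimal sketch of how a Flask service could relay the wheel angle, assuming one process POSTs the current angle and the other polls for the latest value. The `/steering` route, the `angle_deg` JSON field, the ±450° limit, and the class names are hypothetical. Flask is imported lazily inside `create_app` so the thread-safe core has no hard dependency on it.

```python
import threading
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class WheelState:
    """Immutable snapshot of the most recent steering-wheel angle."""
    angle_deg: float = 0.0
    timestamp: float = 0.0


class SteeringSync:
    """Thread-safe store for the latest wheel angle shared between clients."""

    def __init__(self, max_angle_deg: float = 450.0) -> None:
        self._max = max_angle_deg  # hypothetical half of a 900-degree wheel
        self._state = WheelState()
        self._lock = threading.Lock()

    def update(self, angle_deg: float) -> WheelState:
        """Clamp and store a new angle, replacing the previous snapshot."""
        clamped = min(max(angle_deg, -self._max), self._max)
        with self._lock:
            self._state = WheelState(clamped, time.time())
            return self._state

    def latest(self) -> WheelState:
        """Return the most recent snapshot."""
        with self._lock:
            return self._state


def create_app(sync: SteeringSync):
    """Build a Flask app exposing the store over HTTP (requires Flask 2.0+)."""
    from flask import Flask, jsonify, request  # lazy import: core logic works without Flask

    app = Flask(__name__)

    @app.post("/steering")
    def post_steering():
        payload = request.get_json(force=True)
        state = sync.update(float(payload["angle_deg"]))
        return jsonify(angle_deg=state.angle_deg, timestamp=state.timestamp)

    @app.get("/steering")
    def get_steering():
        state = sync.latest()
        return jsonify(angle_deg=state.angle_deg, timestamp=state.timestamp)

    return app
```

The server could be started with `create_app(SteeringSync()).run(port=5000)`, after which a client sends `POST /steering` with a body such as `{"angle_deg": 90}`. Keeping only the latest sample, rather than a queue, suits a control signal where stale angles are not useful. The lock matters because Flask's development server handles requests on multiple threads by default.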

After development of the simulator was complete, a human study was conducted with 36 participants. The results demonstrate that the simulator can improve participants' understanding and awareness of the autonomous system. After using the simulator, participants also showed more positive attitudes in terms of perceived risk, perceived usefulness, perceived ease of use, trust, and behavioral intention. The preprint paper can be found here. This project is open-sourced and the documentation can be found here.

This project won the ITS World Congress 2022 Student Essay and Video Competition.

Below is the video submission:

Here are some other videos of the simulator: